Vector space

SOS Children produced this website for schools as well as this video website about Africa. A quick link for child sponsorship is http://www.sponsor-a-child.org.uk/

In mathematics, a vector space (or linear space) is a collection of objects (called vectors) that, informally speaking, may be scaled and added. More formally, a vector space is a set on which two operations, called (vector) addition and (scalar) multiplication, are defined and satisfy certain natural axioms which are listed below. Vector spaces are the basic objects of study in linear algebra, and are used throughout mathematics, science, and engineering.

The most familiar vector spaces are two- and three-dimensional Euclidean spaces. Vectors in these spaces are ordered pairs or triples of real numbers, and are often represented as geometric vectors, which are quantities with a magnitude and a direction, usually depicted as arrows. These vectors may be added together using the parallelogram rule (vector addition) or multiplied by real numbers (scalar multiplication). The behaviour of geometric vectors under these operations provides a good intuitive model for the behaviour of vectors in more abstract vector spaces, which need not have a geometric interpretation. For example, the set of (real) polynomials forms a vector space.
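The polynomial example can be made concrete with a minimal Python sketch. It assumes, purely for illustration, that a polynomial c₀ + c₁x + c₂x² + … is stored as the tuple of its coefficients (c₀, c₁, c₂, …); the helper names `trim`, `poly_add` and `poly_scale` are hypothetical. Adding two polynomials, or scaling one by a real number, again gives a polynomial, which is what makes these operations a vector space structure.

```python
from itertools import zip_longest

# Hypothetical representation: c0 + c1*x + c2*x^2 + ... is stored as (c0, c1, c2, ...).

def trim(coeffs):
    """Drop trailing zero coefficients so that equal polynomials compare equal."""
    p = list(coeffs)
    while p and p[-1] == 0:
        p.pop()
    return tuple(p)

def poly_add(p, q):
    """Vector addition: add the coefficients of matching powers of x."""
    return trim(a + b for a, b in zip_longest(p, q, fillvalue=0))

def poly_scale(a, p):
    """Scalar multiplication: multiply every coefficient by the scalar a."""
    return trim(a * c for c in p)

p = (1, 2)        # 1 + 2x
q = (0, -2, 3)    # -2x + 3x^2
print(poly_add(p, q))                  # (1, 0, 3), i.e. 1 + 3x^2
print(poly_scale(2, q))                # (0, -4, 6)
print(poly_add(p, poly_scale(-1, p)))  # (), the zero polynomial
```

Trailing zeros are trimmed so that every polynomial has exactly one representation; the zero polynomial becomes the empty tuple.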

Formal definition

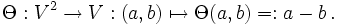

Let F be a field (such as the real numbers or complex numbers), whose elements will be called scalars. A vector space over the field F is a set V together with two binary operations,

- vector addition: V × V → V denoted v + w, where v, w ∈ V, and

- scalar multiplication: F × V → V denoted av, where a ∈ F and v ∈ V,

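satisfying the axioms below. Four of the axioms require the vectors to form an abelian group under addition, two are distributive laws, and the remaining two relate scalar multiplication to multiplication in F and to its identity element 1.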

- Vector addition is associative:

For all u, v, w ∈ V, we have u + (v + w) = (u + v) + w.

- Vector addition is commutative:

For all v, w ∈ V, we have v + w = w + v.

- Vector addition has an identity element:

There exists an element 0 ∈ V, called the zero vector, such that v + 0 = v for all v ∈ V.

- Vector addition has inverse elements:

For all v ∈ V, there exists an element w ∈ V, called the additive inverse of v, such that v + w = 0.

- Distributivity holds for scalar multiplication over vector addition:

For all a ∈ F and v, w ∈ V, we have a (v + w) = a v + a w.

- Distributivity holds for scalar multiplication over field addition:

For all a, b ∈ F and v ∈ V, we have (a + b) v = a v + b v.

- Scalar multiplication is compatible with multiplication in the field of scalars:

For all a, b ∈ F and v ∈ V, we have a (b v) = (ab) v.

- Scalar multiplication has an identity element:

For all v ∈ V, we have 1 v = v, where 1 denotes the multiplicative identity in F.
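Because the axioms are universally quantified statements, they can be checked exhaustively when F and V are finite. The sketch below assumes F is the finite field F₃ (integers modulo 3) and V = F₃², with componentwise operations; the names `add`, `smul`, `neg` and `check_axioms` are illustrative and not taken from any library. Passing a deliberately broken scalar multiplication shows how an axiom can fail.

```python
from itertools import product

P = 3                                          # assumption: the field F_3, integers mod 3
SCALARS = range(P)
VECTORS = list(product(range(P), repeat=2))    # all 9 vectors of F_3^2
ZERO = (0, 0)

def add(v, w):
    """Componentwise vector addition mod P."""
    return tuple((x + y) % P for x, y in zip(v, w))

def smul(a, v):
    """Componentwise scalar multiplication mod P."""
    return tuple((a * x) % P for x in v)

def neg(v):
    """Additive inverse: negate each component mod P."""
    return tuple((-x) % P for x in v)

def check_axioms(scale):
    """Return True if all eight axioms hold for `add` and the given scalar multiplication."""
    for u, v, w in product(VECTORS, repeat=3):
        if add(u, add(v, w)) != add(add(u, v), w):         # associativity
            return False
    for v, w in product(VECTORS, repeat=2):
        if add(v, w) != add(w, v):                         # commutativity
            return False
    for v in VECTORS:
        if add(v, ZERO) != v or add(v, neg(v)) != ZERO:    # zero vector, inverses
            return False
        if scale(1, v) != v:                               # 1 v = v
            return False
    for a, v, w in product(SCALARS, VECTORS, VECTORS):
        if scale(a, add(v, w)) != add(scale(a, v), scale(a, w)):    # a(v + w) = av + aw
            return False
    for a, b, v in product(SCALARS, SCALARS, VECTORS):
        if scale((a + b) % P, v) != add(scale(a, v), scale(b, v)):  # (a + b)v = av + bv
            return False
        if scale(a, scale(b, v)) != scale((a * b) % P, v):          # a(bv) = (ab)v
            return False
    return True

print(check_axioms(smul))              # True
print(check_axioms(lambda a, v: v))    # False
```

With the broken rule a v = v, the law (a + b) v = a v + b v fails (for example for v = (0, 1)), so the check returns False.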

Formally, these are the axioms for a module, so a vector space may be concisely described as a module over a field.

Note that the seventh axiom above, stating a (b v) = (ab) v, is not asserting the associativity of an operation, since there are two operations in question, scalar multiplication: b v; and field multiplication: ab.

Some sources choose to also include two axioms of closure:

- V is closed under vector addition:

If u, v ∈ V, then u + v ∈ V.

- V is closed under scalar multiplication:

If a ∈ F, v ∈ V, then a v ∈ V.

However, the modern formal understanding of the operations as maps with codomain V implies these statements by definition, and thus obviates the need to list them as independent axioms. The validity of closure axioms is key to determining whether a subset of a vector space is a subspace.
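The closure conditions translate directly into a subspace test. As a sketch, assume the ambient space is F₃² (pairs of integers modulo 3), small enough that closure can be checked by trying every pair of elements; `is_subspace` is a hypothetical helper that also requires the subset to be nonempty.

```python
from itertools import product

P = 3  # assumption: the ambient space is F_3^2 (pairs of integers mod 3)

def add(v, w):
    return tuple((x + y) % P for x, y in zip(v, w))

def smul(a, v):
    return tuple((a * x) % P for x in v)

def is_subspace(W):
    """Check that a finite subset W of F_3^2 is nonempty and closed
    under vector addition and scalar multiplication."""
    W = set(W)
    if not W:
        return False
    closed_add = all(add(v, w) in W for v, w in product(W, repeat=2))
    closed_smul = all(smul(a, v) in W for a in range(P) for v in W)
    return closed_add and closed_smul

line = {(t, (2 * t) % P) for t in range(P)}   # the line y = 2x through the origin
shifted = {(t, 1) for t in range(P)}           # the line y = 1, which misses (0, 0)
print(is_subspace(line))     # True
print(is_subspace(shifted))  # False
```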

Note that expressions of the form “v a”, where v ∈ V and a ∈ F, are, strictly speaking, not defined. Because the underlying field is commutative, however, “a v” and “v a” are often treated as synonyms. Moreover, if V is also an algebra over the field F, then for all v, w ∈ V and a ∈ F we have (a v) w = v (a w) = a (v w), which makes it convenient to regard “a v” and “v a” as the same vector.

Elementary properties

There are a number of properties that follow easily from the vector space axioms.

- The zero vector 0 ∈ V is unique:

If 0₁ and 0₂ are zero vectors in V, such that 0₁ + v = v and 0₂ + v = v for all v ∈ V, then 0₁ = 0₂ = 0.

- Scalar multiplication with the zero vector yields the zero vector:

For all a ∈ F, we have a 0 = 0.

- Scalar multiplication by zero yields the zero vector:

For all v ∈ V, we have 0 v = 0, where 0 denotes the additive identity in F.

- No other scalar multiplication yields the zero vector:

We have a v = 0 if and only if a = 0 or v = 0.

- The additive inverse −v of a vector v is unique:

If w₁ and w₂ are additive inverses of v ∈ V, such that v + w₁ = 0 and v + w₂ = 0, then w₁ = w₂. We call the inverse −v and define w − v ≡ w + (−v).

- Scalar multiplication by negative unity yields the additive inverse of the vector:

For all v ∈ V, we have (−1) v = −v, where 1 denotes the multiplicative identity in F.

- Negation commutes freely:

For all a ∈ F and v ∈ V, we have (−a) v = a (−v) = − (a v).
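These properties can be spot-checked by brute force in a small example. The sketch assumes V = F₅² over the field F₅ (integers modulo 5); all names are hypothetical, and the scalar −1 is represented by 4 = P − 1 modulo 5. The additive inverse is found by search, so uniqueness is checked rather than assumed.

```python
from itertools import product

P = 5  # assumption: we test the properties in F_5^2 (pairs of integers mod 5)
VECTORS = list(product(range(P), repeat=2))
ZERO = (0, 0)

def add(v, w):
    return tuple((x + y) % P for x, y in zip(v, w))

def smul(a, v):
    return tuple((a * x) % P for x in v)

def neg(v):
    """The additive inverse -v, found by exhaustive search."""
    inverses = [w for w in VECTORS if add(v, w) == ZERO]
    assert len(inverses) == 1          # the additive inverse is unique
    return inverses[0]

# The zero vector is unique: only one z satisfies z + v = v for every v.
zeros = [z for z in VECTORS if all(add(z, v) == v for v in VECTORS)]
print(zeros)  # [(0, 0)]

for a, v in product(range(P), VECTORS):
    assert smul(a, ZERO) == ZERO                              # a 0 = 0
    assert smul(0, v) == ZERO                                 # 0 v = 0
    assert (smul(a, v) == ZERO) == (a == 0 or v == ZERO)      # a v = 0 iff a = 0 or v = 0
    assert smul(P - 1, v) == neg(v)                           # (-1) v = -v
    assert smul((-a) % P, v) == smul(a, neg(v)) == neg(smul(a, v))  # (-a) v = a (-v) = -(a v)
print("all properties hold in F_5^2")
```

The property that a v = 0 only when a = 0 or v = 0 relies on F being a field: over the integers modulo 4, which do not form a field, 2 · (2, 0) = (0, 0).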

Examples

Subspaces and bases

Main articles: Linear subspace, Basis

Given a vector space V, a nonempty subset W of V that is closed under addition and scalar multiplication is called a subspace of V. Subspaces of V are vector spaces (over the same field) in their own right. The intersection of all subspaces containing a given set of vectors is called its span; if no vector can be removed without changing the span, the set is said to be linearly independent. A linearly independent set whose span is V is called a basis for V.
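For finitely many vectors in Qⁿ, linear independence can be tested by computing the rank (the dimension of the span) with Gaussian elimination: a set is linearly independent exactly when its rank equals the number of vectors. This sketch works over Q rather than R so that Python's `fractions` module gives exact arithmetic; `rank`, `is_linearly_independent` and `is_basis` are hypothetical helper names.

```python
from fractions import Fraction

def rank(vectors):
    """Dimension of the span of vectors in Q^n, by Gaussian elimination with exact fractions."""
    rows = [[Fraction(x) for x in v] for v in vectors]
    r = 0
    ncols = len(rows[0]) if rows else 0
    for c in range(ncols):
        pivot = next((i for i in range(r, len(rows)) if rows[i][c] != 0), None)
        if pivot is None:
            continue                   # no pivot in this column
        rows[r], rows[pivot] = rows[pivot], rows[r]
        for i in range(len(rows)):
            if i != r and rows[i][c] != 0:
                factor = rows[i][c] / rows[r][c]
                rows[i] = [x - factor * y for x, y in zip(rows[i], rows[r])]
        r += 1
    return r

def is_linearly_independent(vectors):
    """No vector is redundant exactly when the rank equals the number of vectors."""
    return rank(vectors) == len(vectors)

def is_basis(vectors, n):
    """n linearly independent vectors in Q^n also span Q^n, so they form a basis."""
    return len(vectors) == n and is_linearly_independent(vectors)

print(is_linearly_independent([(1, 0, 0), (0, 1, 0)]))   # True
print(is_linearly_independent([(1, 2, 3), (2, 4, 6)]))   # False: the second is twice the first
print(is_basis([(1, 1, 0), (0, 1, 1), (1, 0, 1)], 3))    # True
```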

Using Zorn’s Lemma (which is equivalent to the axiom of choice), it can be proven that every vector space has a basis. It follows from the ultrafilter lemma, which is weaker than the axiom of choice, that all bases of a given vector space have the same cardinality. Thus vector spaces over a given field are determined up to isomorphism by a single cardinal number (called the dimension of the vector space) representing the size of a basis. For instance, the real finite-dimensional vector spaces are, up to isomorphism, just R⁰, R¹, R², R³, …. The dimension of the real vector space R³ is three.

It was F. Hausdorff who first proved that every vector space has a basis. Andreas Blass showed that, conversely, this theorem implies the axiom of choice.

A basis makes it possible to express every vector of the space as a unique tuple of the field elements, although caution must be exercised when a vector space does not have a finite basis. Vector spaces are sometimes introduced from this coordinatised viewpoint.
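When a finite basis is given, the coordinate tuple of a vector is found by solving a linear system. The sketch below assumes a basis of Qⁿ given as a list of n tuples of length n and uses Gauss-Jordan elimination with exact fractions; `coordinates` is a hypothetical name, and the case of a space without a finite basis is not handled.

```python
from fractions import Fraction

def coordinates(basis, v):
    """Return the unique scalars c_0, ..., c_{n-1} with
    v = c_0 * basis[0] + ... + c_{n-1} * basis[n-1], for a basis of Q^n."""
    n = len(basis)
    # Augmented matrix: column j holds basis vector j, the last column holds v.
    m = [[Fraction(basis[j][i]) for j in range(n)] + [Fraction(v[i])] for i in range(n)]
    for col in range(n):
        # A nonzero pivot exists because the basis vectors are linearly independent.
        pivot = next(i for i in range(col, n) if m[i][col] != 0)
        m[col], m[pivot] = m[pivot], m[col]
        pv = m[col][col]
        m[col] = [x / pv for x in m[col]]
        for i in range(n):
            if i != col and m[i][col] != 0:
                f = m[i][col]
                m[i] = [x - f * y for x, y in zip(m[i], m[col])]
    return [row[-1] for row in m]

print([str(c) for c in coordinates([(1, 1), (1, -1)], (3, 1))])  # ['2', '1']
```

For the basis (1, 1), (1, −1) of Q², the vector (3, 1) has coordinates 2 and 1, since 2 · (1, 1) + 1 · (1, −1) = (3, 1).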

One often considers vector spaces which also carry a compatible topology. Compatible here means that addition and scalar multiplication should be continuous operations. This requirement actually ensures that the topology gives rise to a uniform structure. When the dimension is infinite, there is generally more than one inequivalent topology, which makes the study of topological vector spaces richer than that of general vector spaces.

Only in such topological vector spaces can one consider infinite sums of vectors, i.e. series, through the notion of convergence. This is important in both pure and applied mathematics, for instance in quantum mechanics, where the states of physical systems are described by vectors in Hilbert spaces, or wherever Fourier expansions are used.
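Convergence of a series needs a topology; in R² the Euclidean norm provides one. As a minimal sketch, assume the series of vectors Σₖ (1/2)ᵏ v for a fixed v, whose sum is 2v because Σₖ (1/2)ᵏ = 2; the code measures the distance from each partial sum to 2v and shows it shrinking towards zero. The helper names are hypothetical.

```python
import math

def partial_sums(terms):
    """Yield the partial sums t_0, t_0 + t_1, ... of a sequence of vectors in R^2."""
    s = (0.0, 0.0)
    for t in terms:
        s = (s[0] + t[0], s[1] + t[1])
        yield s

def norm(v):
    """Euclidean length; it defines the topology in which convergence is measured."""
    return math.hypot(v[0], v[1])

v = (1.0, -3.0)
terms = [(0.5 ** k * v[0], 0.5 ** k * v[1]) for k in range(60)]
limit = (2 * v[0], 2 * v[1])   # sum of (1/2)^k for k >= 0 is 2, so the series sums to 2v
errors = [norm((limit[0] - s[0], limit[1] - s[1])) for s in partial_sums(terms)]
print(errors[0], errors[10], errors[-1])   # distances to the limit shrink towards 0
```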

Linear maps

Main article: Linear map

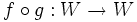

Given two vector spaces V and W over the same field F, one can define linear maps or “linear transformations” from V to W. These are functions f : V → W that are compatible with the relevant structure, i.e., they preserve sums and scalar products. The set of all linear maps from V to W, denoted Hom_F(V, W), is also a vector space over F. When bases for both V and W are given, linear maps can be expressed in terms of components as matrices.
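Once bases are fixed, a linear map R³ → R² can be stored as a 2 × 3 matrix whose j-th column is the image of the j-th standard basis vector. The sketch below assumes plain Python lists of rows with integer entries, so comparisons are exact; the helpers `apply`, `add_vec`, `scale_vec` and `add_maps` are hypothetical. It checks that a matrix map preserves sums and scalar products, and that adding two maps pointwise corresponds to adding their matrices, which is the vector space structure on the set of linear maps.

```python
def apply(matrix, v):
    """Apply the linear map with the given matrix (a list of rows) to the vector v."""
    return tuple(sum(a * x for a, x in zip(row, v)) for row in matrix)

def add_vec(v, w):
    return tuple(x + y for x, y in zip(v, w))

def scale_vec(c, v):
    return tuple(c * x for x in v)

def add_maps(A, B):
    """Pointwise sum of two linear maps V -> W; its matrix is the entrywise sum."""
    return [[a + b for a, b in zip(ra, rb)] for ra, rb in zip(A, B)]

# Two maps R^3 -> R^2 (2 rows, 3 columns), chosen arbitrarily for illustration.
A = [[1, 0, 2],
     [0, 3, -1]]
B = [[0, 1, 0],
     [1, 0, 1]]
v, w, c = (1, 2, 3), (-1, 0, 4), 5

assert apply(A, add_vec(v, w)) == add_vec(apply(A, v), apply(A, w))   # preserves sums
assert apply(A, scale_vec(c, v)) == scale_vec(c, apply(A, v))         # preserves scalar products
assert apply(add_maps(A, B), v) == add_vec(apply(A, v), apply(B, v))  # (A + B) v = A v + B v
print(apply(A, v))  # (7, 3)
```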

An isomorphism is a linear map f : V → W for which there exists an inverse map g : W → V such that the compositions f ∘ g : W → W and g ∘ f : V → V are identity maps. A linear map that is both one-to-one (injective) and onto (surjective) is necessarily an isomorphism. If there exists an isomorphism between V and W, the two spaces are said to be isomorphic; they are then essentially identical as vector spaces.
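In coordinates, an isomorphism of a finite-dimensional space with itself is an invertible square matrix, and composition of maps is matrix multiplication. The sketch below is restricted, for simplicity, to 2 × 2 matrices over Q; `mat_mul` and `inverse_2x2` are hypothetical names. A zero determinant signals that the map is not injective, so no inverse exists.

```python
from fractions import Fraction

def mat_mul(A, B):
    """Matrix product, i.e. the composition of the corresponding linear maps."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B))) for j in range(len(B[0]))]
            for i in range(len(A))]

def inverse_2x2(M):
    """Inverse of a 2x2 matrix over Q, or None when the determinant is 0."""
    (a, b), (c, d) = M
    det = Fraction(a * d - b * c)
    if det == 0:
        return None                    # not injective, hence not an isomorphism
    return [[d / det, -b / det], [-c / det, a / det]]

I = [[1, 0], [0, 1]]
F = [[2, 1], [1, 1]]          # an invertible map f : Q^2 -> Q^2
G = inverse_2x2(F)            # its inverse g
assert mat_mul(F, G) == I and mat_mul(G, F) == I   # f∘g and g∘f are identity maps
print(inverse_2x2([[1, 2], [2, 4]]))               # None
```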

The vector spaces over a fixed field F together with the linear maps are a category, indeed an abelian category.

Generalizations

From an abstract point of view, vector spaces are modules over a field, F. The common practice of identifying a v and v a in a vector space makes the vector space an F-F bimodule. Modules in general need not have bases; those that do (including all vector spaces) are known as free modules.

A family of vector spaces, parametrised continuously by some underlying topological space, is a vector bundle.

An affine space is a set with a transitive vector space action. Note that a vector space is an affine space over itself, by the structure map V × V → V, (a, b) ↦ a − b.
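A vector space acting on itself by translation can be sketched briefly. The example assumes points and vectors of Z² ⊂ R² are both plain tuples (integers keep comparisons exact); `translate` and `difference` are hypothetical names, the latter implementing the map (a, b) ↦ a − b, which gives the vector carrying b to a.

```python
def translate(point, v):
    """Action of the vector v on a point: move the point by v."""
    return tuple(p + x for p, x in zip(point, v))

def difference(a, b):
    """The map (a, b) -> a - b: the vector that carries the point b to the point a."""
    return tuple(x - y for x, y in zip(a, b))

a, b = (3, 5), (1, -2)
v = difference(a, b)
assert translate(b, v) == a   # transitivity: some vector carries b to a
print(v)                      # (2, 7)
```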

Additional structures

It is common to study vector spaces with certain additional structures. This is often necessary for recovering ordinary notions from geometry.

- A real or complex vector space with a well-defined concept of length, i.e., a norm, is called a normed vector space.

- A vector space equipped with an inner product, which yields well-defined concepts of both length and angle, is called an inner product space.

- A vector space with a topology compatible with the operations, such that addition and scalar multiplication are continuous maps, is called a topological vector space. The topological structure matters chiefly when the underlying vector space is infinite-dimensional.

- A vector space with an additional bilinear operator defining the multiplication of two vectors is an algebra over a field.

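- A real vector space with a partial order compatible with the operations (so that u ≤ v implies u + w ≤ v + w, and a u ≤ a v for every scalar a ≥ 0) is called an ordered vector space.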
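For Rⁿ with the standard dot product, both length and angle come from the inner product: ‖v‖ = √⟨v, v⟩ and cos θ = ⟨u, v⟩ / (‖u‖ ‖v‖). A minimal sketch with hypothetical helper names `inner`, `norm` and `angle` follows; the cosine is clamped to [−1, 1] to guard against floating-point rounding.

```python
import math

def inner(u, v):
    """Standard (dot) inner product on R^n."""
    return sum(x * y for x, y in zip(u, v))

def norm(v):
    """Length induced by the inner product: ||v|| = sqrt(<v, v>)."""
    return math.sqrt(inner(v, v))

def angle(u, v):
    """Angle between nonzero vectors, from cos(theta) = <u, v> / (||u|| ||v||)."""
    c = inner(u, v) / (norm(u) * norm(v))
    return math.acos(max(-1.0, min(1.0, c)))   # clamp against rounding error

print(norm((3, 4)))                          # 5.0
print(math.degrees(angle((1, 0), (1, 1))))   # approximately 45
```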